The Face API, which is part of the Microsoft Cognitive Services, detects and identifies faces. It can also find similar faces and verify whether two images show the same person, and you can train the service to improve the identification of people. In this blog post, I’ll only use the detect service, which finds faces and returns the age, gender, emotions and other data of each detected face.

Prerequisites: Create the Face API Service in Azure

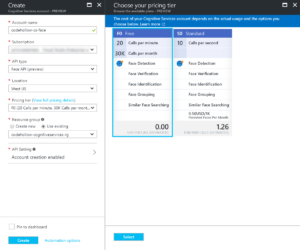

Like all Microsoft Cognitive Services, the Face API service can be created in Azure via the portal. It is part of the “Cognitive Services APIs”, so just search for that entry and create it. Select the Face API as the type of the cognitive service and check the pricing options:

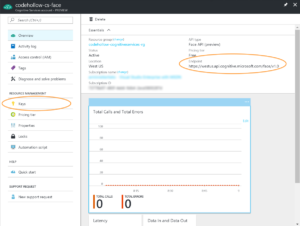

After the service has been created, we just need to note the API key and the API endpoint. Navigate to the service in the Azure portal and you will see the endpoint URL in the overview. At the time of writing it is only available in west-us, so the endpoint URL will be: https://westus.api.cognitive.microsoft.com/face/v1.0

The keys can be found under “Keys”. Just copy one of them and use it later in the application.
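If you would rather not paste the key into the source code, one option is to read it from an environment variable. The following is only a minimal sketch: the class FaceApiSettings and the variable names FACE_API_KEY and FACE_API_ENDPOINT are hypothetical names chosen for this example and are not defined by Azure or the Face API.

```csharp
using System;

public static class FaceApiSettings
{
    // Hypothetical environment variable names, chosen for this sketch only.
    public const string KeyVariable = "FACE_API_KEY";
    public const string EndpointVariable = "FACE_API_ENDPOINT";

    // Endpoint mentioned in this post; used when no override is set.
    public const string DefaultEndpoint = "https://westus.api.cognitive.microsoft.com/face/v1.0";

    // Returns the API key or throws if the variable is missing or empty.
    public static string GetApiKey()
    {
        string key = Environment.GetEnvironmentVariable(KeyVariable);
        if (string.IsNullOrWhiteSpace(key))
        {
            throw new InvalidOperationException(
                "Set the " + KeyVariable + " environment variable to one of the keys from the Azure portal.");
        }
        return key.Trim();
    }

    // Returns the configured endpoint without a trailing slash, or the default one.
    public static string GetEndpoint()
    {
        string endpoint = Environment.GetEnvironmentVariable(EndpointVariable);
        return string.IsNullOrWhiteSpace(endpoint)
            ? DefaultEndpoint
            : endpoint.Trim().TrimEnd('/');
    }
}
```

With something like this in place, the samples could use FaceApiSettings.GetApiKey() where they otherwise hold the key in a string field.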

Using the Face API with C#

The Face API can be accessed from C# with a plain HttpClient or with the NuGet package Microsoft.ProjectOxford.Face. My first sample uses HttpClient, just to show how the call works. Because it returns the raw response, it also contains all data that is currently available. The NuGet package is not fully up to date; for example, it does not contain the emotions.

Access Face API with C# and HttpClient

In the following sample, I’ll just send an image to the Face API and show the JSON output in the console. If you want to work with the data, you can parse it with Newtonsoft.Json (JArray.Parse rather than JObject.Parse, because the response is a JSON array of faces) or, as already stated, use the NuGet package which is described later in this post:

    using System;
    using System.Net.Http;
    using System.Net.Http.Headers;
    using System.Threading.Tasks;

    namespace AzureCognitiveService.Face
    {
        class Program
        {
            // Replace with one of the keys of your Face API service.
            private const string APIKEY = "[APIKEY]";

            static void Main(string[] args)
            {
                Console.WriteLine("Welcome to the Azure Cognitive Services - Face API");
                Console.WriteLine("Please enter the path of the image:");
                string path = Console.ReadLine();

                // Run the asynchronous call and block until it has finished.
                Task.Run(async () =>
                {
                    var image = System.IO.File.ReadAllBytes(path);
                    var output = await DetectFaces(image);
                    Console.WriteLine(output);
                }).Wait();

                Console.WriteLine("Press key to exit!");
                Console.ReadKey();
            }

            // Sends the image bytes to the detect operation and returns the raw JSON response.
            public static async Task<string> DetectFaces(byte[] image)
            {
                using (var client = new HttpClient())
                {
                    // The subscription key is passed as a request header.
                    client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key", APIKEY);

                    string requestParams = "returnFaceId=true&returnFaceLandmarks=true&returnFaceAttributes=age,gender,headPose,smile,facialHair,glasses,emotion";
                    string uri = "https://westus.api.cognitive.microsoft.com/face/v1.0/detect?" + requestParams;

                    using (var content = new ByteArrayContent(image))
                    {
                        // The image is sent as binary data in the request body.
                        content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");
                        var response = await client.PostAsync(uri, content);
                        var jsonText = await response.Content.ReadAsStringAsync();
                        return jsonText;
                    }
                }
            }
        }
    }
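The request URI in the sample above is assembled from the endpoint and a fixed parameter string. If you want to vary which attributes are requested, a small helper can build the same URI. This is only a sketch under the assumption that attribute names are plain identifiers that need no URL escaping; DetectUriBuilder is a hypothetical helper, not part of any SDK.

```csharp
using System;
using System.Collections.Generic;

public static class DetectUriBuilder
{
    // Builds the detect URI from the endpoint and the requested options.
    // Assumes attribute names are plain identifiers such as "age" or "emotion".
    public static string Build(
        string endpoint,
        bool returnFaceId,
        bool returnFaceLandmarks,
        IEnumerable<string> attributes)
    {
        string query =
            "returnFaceId=" + (returnFaceId ? "true" : "false") +
            "&returnFaceLandmarks=" + (returnFaceLandmarks ? "true" : "false");

        // Only add the attribute parameter when at least one attribute is requested.
        string attributeList = string.Join(",", attributes);
        if (attributeList.Length > 0)
        {
            query += "&returnFaceAttributes=" + attributeList;
        }

        return endpoint.TrimEnd('/') + "/detect?" + query;
    }
}
```

Calling Build with the endpoint from this post, both flags set to true and the attributes age, gender, headPose, smile, facialHair, glasses and emotion yields the same URI as the hardcoded string in the sample.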

I tested the HttpClient sample with a photo of myself and got the following output:

output.json

    [{
        "faceId": "95d01a0d-1f2a-4855-ab2b-881f9b4387ef",
        "faceRectangle": {
            "top": 332,
            "left": 709,
            "width": 48,
            "height": 48
        },
        "faceLandmarks": {
            "pupilLeft": { "x": 723.6, "y": 344.7 },
            "pupilRight": { "x": 744.2, "y": 346.3 },
            "noseTip": { "x": 732.8, "y": 357.6 },
            "mouthLeft": { "x": 720.7, "y": 365.6 },
            "mouthRight": { "x": 743.7, "y": 367.1 },
            "eyebrowLeftOuter": { "x": 715.8, "y": 341.8 },
            "eyebrowLeftInner": { "x": 728.3, "y": 341.2 },
            "eyeLeftOuter": { "x": 720.4, "y": 345.1 },
            "eyeLeftTop": { "x": 723.3, "y": 344.5 },
            "eyeLeftBottom": { "x": 723.3, "y": 345.8 },
            "eyeLeftInner": { "x": 726.3, "y": 345.5 },
            "eyebrowRightInner": { "x": 738.2, "y": 342.2 },
            "eyebrowRightOuter": { "x": 752.0, "y": 342.8 },
            "eyeRightInner": { "x": 740.5, "y": 346.3 },
            "eyeRightTop": { "x": 743.6, "y": 345.7 },
            "eyeRightBottom": { "x": 743.3, "y": 347.1 },
            "eyeRightOuter": { "x": 746.4, "y": 347.0 },
            "noseRootLeft": { "x": 730.5, "y": 346.3 },
            "noseRootRight": { "x": 736.4, "y": 346.5 },
            "noseLeftAlarTop": { "x": 728.3, "y": 353.3 },
            "noseRightAlarTop": { "x": 738.3, "y": 353.7 },
            "noseLeftAlarOutTip": { "x": 726.2, "y": 356.6 },
            "noseRightAlarOutTip": { "x": 739.8, "y": 357.7 },
            "upperLipTop": { "x": 733.0, "y": 365.1 },
            "upperLipBottom": { "x": 732.7, "y": 366.4 },
            "underLipTop": { "x": 731.7, "y": 370.6 },
            "underLipBottom": { "x": 731.4, "y": 373.1 }
        },
        "faceAttributes": {
            "smile": 1.0,
            "headPose": {
                "pitch": 0.0,
                "roll": 3.2,
                "yaw": -0.5
            },
            "gender": "male",
            "age": 34.6,
            "facialHair": {
                "moustache": 0.0,
                "beard": 0.2,
                "sideburns": 0.2
            },
            "glasses": "ReadingGlasses",
            "emotion": {
                "anger": 0.0,
                "contempt": 0.0,
                "disgust": 0.0,
                "fear": 0.0,
                "happiness": 1.0,
                "neutral": 0.0,
                "sadness": 0.0,
                "surprise": 0.0
            }
        }
    }]

Apparently I look like a 34-year-old man (the service estimates 34.6), and my face in the image shows pure happiness (100%). All other emotions are non-existent (0%).
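To read these values in code rather than by eye, the JSON can be parsed and the emotion with the highest score picked per face. The sketch below uses System.Text.Json, which ships with .NET Core 3.0 and later, instead of Newtonsoft.Json so that it needs no extra package; that choice and the FaceJsonReader class are assumptions of this example. It only relies on the property names visible in the output above.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;
using System.Text.Json;

public static class FaceJsonReader
{
    // Returns one summary line per face found in the detect response.
    public static List<string> Summarize(string json)
    {
        var lines = new List<string>();
        using (JsonDocument doc = JsonDocument.Parse(json))
        {
            // A successful detect response is an array with one element per face;
            // anything else (for instance an error object) is rejected here.
            if (doc.RootElement.ValueKind != JsonValueKind.Array)
            {
                throw new InvalidOperationException("Expected a JSON array of faces.");
            }

            foreach (JsonElement face in doc.RootElement.EnumerateArray())
            {
                JsonElement attributes = face.GetProperty("faceAttributes");
                string gender = attributes.GetProperty("gender").GetString();
                double age = attributes.GetProperty("age").GetDouble();

                // Pick the emotion with the highest score.
                JsonProperty top = attributes.GetProperty("emotion")
                    .EnumerateObject()
                    .OrderByDescending(e => e.Value.GetDouble())
                    .First();

                lines.Add(string.Format(
                    CultureInfo.InvariantCulture,
                    "{0}, {1}: {2} ({3:0.00})",
                    gender, age, top.Name, top.Value.GetDouble()));
            }
        }
        return lines;
    }
}
```

The method expects the faceAttributes object with gender, age and emotion to be present, so those attributes have to be requested in the detect call as in the sample above.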

Access Face API with C# and the NuGet package

As already mentioned, the NuGet package Microsoft.ProjectOxford.Face makes it very easy to access the Face API. Unfortunately, it does not wrap all properties (the emotions are missing), but there are already some commits in the GitHub project (https://github.com/Microsoft/Cognitive-Face-Windows) that will fix that.

Detect faces

This is nearly the same sample as above, but this time I’ll use the NuGet package. As already mentioned, the package does not currently contain the emotions, which is why I’ll just show the smile factor:

    using Microsoft.ProjectOxford.Face;
    using System;
    using System.Collections.Generic;
    using System.Threading.Tasks;

    namespace AzureCognitiveService.Face
    {
        class Program
        {
            // Replace with one of the keys of your Face API service.
            private const string APIKEY = "[APIKEY]";

            static void Main(string[] args)
            {
                Console.WriteLine("Welcome to the Azure Cognitive Services - Face API");
                Console.WriteLine("Please enter the path of the image:");
                string path = Console.ReadLine();

                // Run the asynchronous call and block until it has finished.
                Task.Run(async () =>
                {
                    var faces = await DetectFaces(path);
                    foreach (var face in faces)
                    {
                        Console.WriteLine($"{face.FaceAttributes.Gender}, {face.FaceAttributes.Age}: Smile: {face.FaceAttributes.Smile}");
                    }
                }).Wait();

                Console.WriteLine("Press key to exit!");
                Console.ReadKey();
            }

            // Opens the image file and lets the client library call the detect operation.
            public static async Task<Microsoft.ProjectOxford.Face.Contract.Face[]> DetectFaces(string path)
            {
                var client = new FaceServiceClient(APIKEY);
                using (System.IO.Stream stream = System.IO.File.OpenRead(path))
                {
                    // The two "true" arguments request the face ID and the face landmarks.
                    var data = await client.DetectAsync(stream, true, true, new List<FaceAttributeType>()
                    {
                        FaceAttributeType.Age,
                        FaceAttributeType.Gender,
                        FaceAttributeType.Glasses,
                        FaceAttributeType.Smile
                    });
                    return data;
                }
            }
        }
    }

I got the output:

male, 41,9: Smile: 0,996

for the following image:
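The decimal commas in the output above (41,9 and 0,996) most likely come from the regional settings of the machine, because string interpolation formats numbers with the current culture. If you prefer output that looks the same everywhere, the values can be formatted with the invariant culture. This is a small sketch; FaceOutput and FormatFace are hypothetical names used only for this example.

```csharp
using System;
using System.Globalization;

public static class FaceOutput
{
    // Formats the values with the invariant culture, so the decimal separator
    // is always a dot regardless of the machine's regional settings.
    public static string FormatFace(string gender, double age, double smile)
    {
        return string.Format(
            CultureInfo.InvariantCulture,
            "{0}, {1}: Smile: {2}",
            gender, age, smile);
    }
}
```

In the loop of the sample, this would be called with face.FaceAttributes.Gender, face.FaceAttributes.Age and face.FaceAttributes.Smile instead of using the interpolated string.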

In this post, I just used the detect service, but the face API has much more functionality which I’ll probably describe in another blog post. So, stay tuned! 😉

Additional information

Microsoft Face API Overview: https://www.microsoft.com/cognitive-services/en-us/face-api

Microsoft Face API Documentation: https://www.microsoft.com/cognitive-services/en-us/face-api/documentation/overview

Face API Reference: https://westus.dev.cognitive.microsoft.com/docs/services/563879b61984550e40cbbe8d/operations/563879b61984550f30395236

Windows SDK for Microsoft Face API: https://github.com/Microsoft/Cognitive-Face-Windows
