The Computer Vision API is part of Microsoft Cognitive Services and helps you analyze images, extract information from them, or even modify them slightly. It currently supports the following cases:

- Analyze an Image: Returns categories and tags (e.g. “mountain”, “outdoor”), detects celebrities in the picture, checks if it contains adult or racy content, gives a description of the image, detects faces (e.g. male, 20 years), analyzes colors and the image type.

- Get a thumbnail: returns a thumbnail of the image.

- OCR (optical character recognition): detects text in an image.

- Analyze videos: does the same as image analysis, but for videos.

In this blog post, I’ll use C# to analyze images, run OCR, and create a thumbnail.

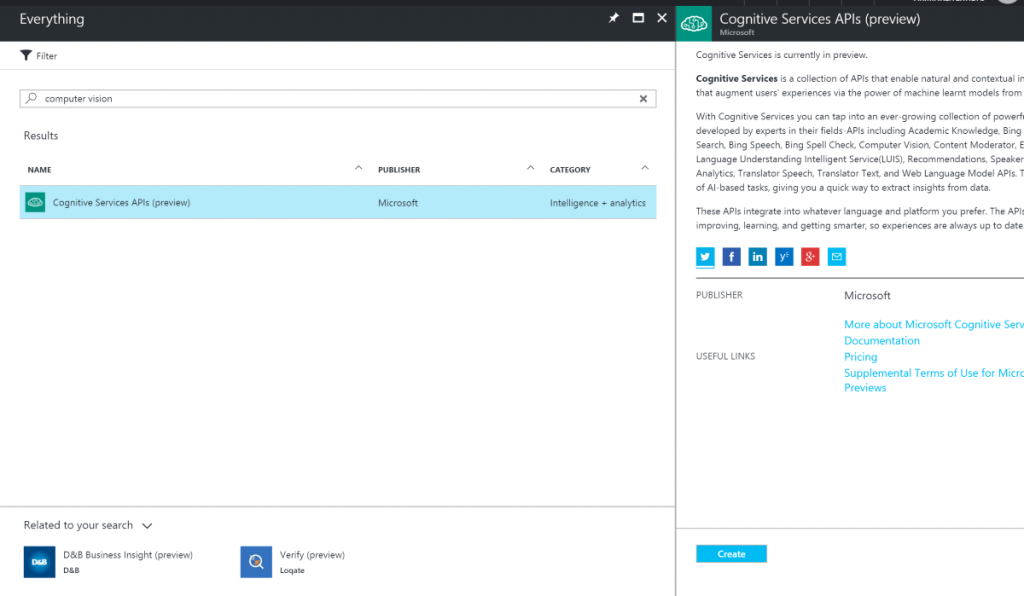

Prerequisites: Create the Computer Vision service in Azure

The first step in using the Computer Vision API is to create the service. It is part of the “Cognitive Services APIs” and can be created in the Azure portal:

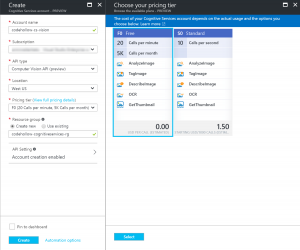

The next step shows the pricing options and the features they include. Enter the required information and create the service. The API type field lists all available Cognitive Services:

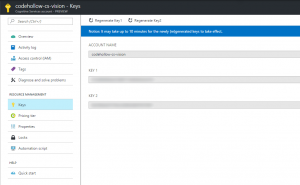

Finally, open the newly created service and copy the endpoint address and the API key. At the time of writing, the service is only available in the West US region (westus), so the endpoint is the same for everyone:

https://westus.api.cognitive.microsoft.com/vision/v1.0

The key is in the Keys section. It must be sent with every API call, so we will need it later in our code.
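Every request carries the key in the `Ocp-Apim-Subscription-Key` HTTP header, which is exactly what the HttpClient samples in this post do. As a minimal sketch, assuming you want the endpoint and the key handling in one place, a helper could build the request like this (`VisionRequests` and `Create` are hypothetical names, not part of any SDK):

```csharp
using System.Net.Http;

// Hypothetical helper: builds a POST request for one Computer Vision operation.
public static class VisionRequests
{
    // Endpoint copied from the Azure portal (westus at the time of writing)
    public const string Endpoint = "https://westus.api.cognitive.microsoft.com/vision/v1.0";

    // operation is e.g. "analyze", "ocr" or "generateThumbnail";
    // query is an optional, already encoded query string without the leading '?'.
    public static HttpRequestMessage Create(string operation, string apiKey, string query = "")
    {
        string uri = $"{Endpoint}/{operation}" + (string.IsNullOrEmpty(query) ? "" : "?" + query);
        var request = new HttpRequestMessage(HttpMethod.Post, uri);

        // The subscription key must accompany every call
        request.Headers.Add("Ocp-Apim-Subscription-Key", apiKey);
        return request;
    }
}
```

For example, `VisionRequests.Create("analyze", key, "visualFeatures=Tags")` targets `.../vision/v1.0/analyze?visualFeatures=Tags`; set its `Content` to the image bytes and send it with `HttpClient.SendAsync`.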

Using the Computer Vision API with C#

The Computer Vision API can be accessed from C# with a plain HttpClient. This keeps the solution free of extra dependencies and works well if you only have a small task to do (e.g. getting the categories of an image). The other way is to use the NuGet package provided by Microsoft (described later). Like the packages for all Cognitive Services APIs, its name starts with Microsoft.ProjectOxford. In this blog post, I’ll use both approaches, starting with HttpClient:

Analyze an image with C#

The following code sample requests all visual features from the analysis service, but it only uses the tags from the image description and returns them as a string array:

```csharp
using Newtonsoft.Json.Linq;
using System;
using System.IO;
using System.Linq;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Threading.Tasks;

namespace AzureCognitiveServices.ComputerVision
{
    class Program
    {
        // Replace with the key from the Keys section of your Computer Vision service
        private static string APIKEY = "APIKEY";

        static void Main(string[] args)
        {
            Console.WriteLine("Welcome to the Azure Cognitive Services - Computer Vision.");
            Console.WriteLine("Please enter the path of the image:");
            string path = Console.ReadLine();

            var image = File.ReadAllBytes(path);
            var tags = GetImageTags(image);
            tags.Wait();

            Console.WriteLine(string.Join(", ", tags.Result));
            Console.WriteLine("Press key to exit!");
            Console.ReadKey();
        }

        public static async Task<string[]> GetImageTags(byte[] image)
        {
            var client = new HttpClient();
            // The subscription key must be sent with every request
            client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key", APIKEY);

            // Request all visual features; only the description tags are used below
            string requestParameters = "visualFeatures=Categories,Tags,Description,Faces,ImageType,Color,Adult&language=en";
            string uri = "https://westus.api.cognitive.microsoft.com/vision/v1.0/analyze?" + requestParameters;

            using (var content = new ByteArrayContent(image))
            {
                // The image is sent as raw binary data
                content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");
                var response = await client.PostAsync(uri, content);
                var jsonText = await response.Content.ReadAsStringAsync();
                Console.WriteLine(jsonText);

                // Fail with the error message instead of a NullReferenceException below
                if (!response.IsSuccessStatusCode)
                    throw new HttpRequestException($"{(int)response.StatusCode}: {jsonText}");

                var json = JObject.Parse(jsonText);
                var tags = json.SelectToken("description.tags");
                return tags.Select(x => x.ToString()).ToArray();
            }
        }
    }
}
```
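The sample above returns `description.tags`, a plain list of words without confidence values. If you need confidences, the top-level `tags` array carries a `name` and a `confidence` for each entry. A minimal sketch that filters it by a threshold, assuming .NET Core 3.0 or later so that System.Text.Json can be used instead of Newtonsoft.Json (`AnalyzeResult.ConfidentTags` is a hypothetical helper):

```csharp
using System.Linq;
using System.Text.Json;

public static class AnalyzeResult
{
    // Returns the names from the top-level "tags" array whose confidence is
    // greater than minConfidence, ordered from most to least confident.
    public static string[] ConfidentTags(string jsonText, double minConfidence)
    {
        using (JsonDocument doc = JsonDocument.Parse(jsonText))
        {
            // Tags are only present if the Tags visual feature was requested
            if (!doc.RootElement.TryGetProperty("tags", out JsonElement tags))
                return new string[0];

            return tags.EnumerateArray()
                .Select(t => (Name: t.GetProperty("name").GetString(),
                              Confidence: t.GetProperty("confidence").GetDouble()))
                .Where(t => t.Confidence > minConfidence)
                .OrderByDescending(t => t.Confidence)
                .Select(t => t.Name)
                .ToArray();
        }
    }
}
```

Applied to the first response shown next (the shadow picture) with a threshold of 0.9, this yields rock, outdoor, mountain, rocky and nature; valley (0.899) just misses the cut.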

Let’s look, for example, at the following image (which shows my shadow as I climb a mountain) and the JSON output of the API:

```json
{
  "categories": [
    { "name": "outdoor_mountain", "score": 0.64453125 },
    { "name": "outdoor_stonerock", "score": 0.26953125 }
  ],
  "adult": {
    "isAdultContent": false,
    "isRacyContent": false,
    "adultScore": 0.036954909563064575,
    "racyScore": 0.063448332250118256
  },
  "tags": [
    { "name": "rock", "confidence": 0.99913871288299561 },
    { "name": "outdoor", "confidence": 0.99532252550125122 },
    { "name": "mountain", "confidence": 0.99497038125991821 },
    { "name": "rocky", "confidence": 0.97868114709854126 },
    { "name": "nature", "confidence": 0.95584964752197266 },
    { "name": "valley", "confidence": 0.89927709102630615 },
    { "name": "canyon", "confidence": 0.73100519180297852 },
    { "name": "hill", "confidence": 0.60852372646331787 },
    { "name": "hillside", "confidence": 0.55912631750106812 }
  ],
  "description": {
    "tags": ["rock", "outdoor", "mountain", "rocky", "nature", "valley", "hill", "hillside", "grass", "side", "small", "standing", "man", "top", "slope", "covered", "large", "sheep", "grazing", "field", "white", "group", "riding"],
    "captions": [
      { "text": "a close up of a rocky hill", "confidence": 0.51952168643870456 }
    ]
  },
  "requestId": "092611f4-a903-47ea-9adb-e53f0cc09d8f",
  "metadata": { "width": 1200, "height": 675, "format": "Png" },
  "faces": [],
  "color": {
    "dominantColorForeground": "Grey",
    "dominantColorBackground": "Black",
    "dominantColors": ["Black", "Grey"],
    "accentColor": "0371C8",
    "isBWImg": false
  },
  "imageType": { "clipArtType": 0, "lineDrawingType": 0 }
}
```

…and another one. That’s me standing on a mountain near the place where I lived for a long time. The API also tells me that I look like a 34-year-old man (which is far better than the age of 42 it gives me for the picture on my About Me page):

```json
{
  "categories": [
    { "name": "outdoor_mountain", "score": 0.734375 }
  ],
  "adult": {
    "isAdultContent": false,
    "isRacyContent": false,
    "adultScore": 0.012527346611022949,
    "racyScore": 0.012729388661682606
  },
  "tags": [
    { "name": "sky", "confidence": 0.999982476234436 },
    { "name": "outdoor", "confidence": 0.99960440397262573 },
    { "name": "mountain", "confidence": 0.99661022424697876 },
    { "name": "person", "confidence": 0.991381824016571 },
    { "name": "man", "confidence": 0.98152691125869751 },
    { "name": "nature", "confidence": 0.9488060474395752 },
    { "name": "standing", "confidence": 0.94170475006103516 },
    { "name": "hill", "confidence": 0.70216524600982666 },
    { "name": "posing", "confidence": 0.6068764328956604 },
    { "name": "overlooking", "confidence": 0.4831596314907074 },
    { "name": "hillside", "confidence": 0.19828797876834869 },
    { "name": "highland", "confidence": 0.17010223865509033 },
    { "name": "distance", "confidence": 0.15806387364864349 }
  ],
  "description": {
    "tags": ["outdoor", "mountain", "person", "man", "nature", "standing", "grass", "top", "snow", "hill", "holding", "posing", "wearing", "field", "overlooking", "flying", "background", "photo", "skiing", "camera", "slope", "covered", "hillside", "woman", "rock", "young", "red", "airplane"],
    "captions": [
      { "text": "a man standing in front of a mountain", "confidence": 0.81513871904031676 }
    ]
  },
  "requestId": "2d605ee8-c36a-4150-a160-7e6d79409e63",
  "metadata": { "width": 1200, "height": 675, "format": "Png" },
  "faces": [
    {
      "age": 34,
      "gender": "Male",
      "faceRectangle": { "left": 709, "top": 332, "width": 48, "height": 48 }
    }
  ],
  "color": {
    "dominantColorForeground": "Black",
    "dominantColorBackground": "White",
    "dominantColors": ["White", "Brown"],
    "accentColor": "2368A8",
    "isBWImg": false
  },
  "imageType": { "clipArtType": 0, "lineDrawingType": 0 }
}
```
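The `faces` array holds an estimated age, a gender and a `faceRectangle` in pixels for every detected face; for the shadow picture it is empty. A minimal sketch, again assuming System.Text.Json is available, that turns each entry into a readable line (`FaceResults.Describe` is a hypothetical helper):

```csharp
using System.Collections.Generic;
using System.Text.Json;

public static class FaceResults
{
    // Formats every entry of the "faces" array as
    // "<gender>, age <age> at (<left>, <top>) <width>x<height>".
    public static List<string> Describe(string jsonText)
    {
        var result = new List<string>();
        using (JsonDocument doc = JsonDocument.Parse(jsonText))
        {
            // Faces are only present if the Faces visual feature was requested
            if (!doc.RootElement.TryGetProperty("faces", out JsonElement faces))
                return result;

            foreach (JsonElement face in faces.EnumerateArray())
            {
                JsonElement r = face.GetProperty("faceRectangle");
                result.Add($"{face.GetProperty("gender").GetString()}, age {face.GetProperty("age").GetInt32()} " +
                           $"at ({r.GetProperty("left").GetInt32()}, {r.GetProperty("top").GetInt32()}) " +
                           $"{r.GetProperty("width").GetInt32()}x{r.GetProperty("height").GetInt32()}");
            }
        }
        return result;
    }
}
```

For the second response above it returns a single line, `Male, age 34 at (709, 332) 48x48`.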

OCR – optical character recognition with C#

Running OCR is as easy as calling the analyze function: just call the right URL and interpret the result. The following sample simply returns the JSON output as text:

```csharp
// Uses the APIKEY field and the using directives of the first sample
public static async Task<string> Ocr(byte[] image)
{
    var client = new HttpClient();
    client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key", APIKEY);

    string uri = "https://westus.api.cognitive.microsoft.com/vision/v1.0/ocr";

    using (var content = new ByteArrayContent(image))
    {
        content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");
        var response = await client.PostAsync(uri, content);

        // Return the raw JSON describing the recognized text
        var jsonText = await response.Content.ReadAsStringAsync();
        return jsonText;
    }
}
```
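The JSON returned by the OCR endpoint groups the recognized text into regions, each region into lines and each line into words, which is the same hierarchy the NuGet sample further below walks through via `Regions`, `Lines` and `Words`. A minimal sketch that flattens that structure into one string per line, assuming the raw response uses the property names `regions`, `lines`, `words` and `text`, and assuming System.Text.Json (`OcrResult.Lines` is a hypothetical helper):

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

public static class OcrResult
{
    // Walks regions -> lines -> words and joins the words of each line with spaces.
    public static List<string> Lines(string jsonText)
    {
        var lines = new List<string>();
        using (JsonDocument doc = JsonDocument.Parse(jsonText))
        {
            foreach (JsonElement region in doc.RootElement.GetProperty("regions").EnumerateArray())
            {
                foreach (JsonElement line in region.GetProperty("lines").EnumerateArray())
                {
                    var words = line.GetProperty("words").EnumerateArray()
                        .Select(w => w.GetProperty("text").GetString());
                    lines.Add(string.Join(" ", words));
                }
            }
        }
        return lines;
    }
}
```

The bounding boxes that the service returns for regions, lines and words are ignored here; keep them if you need to know where on the image the text was found.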

Get a thumbnail

At first glance, a thumbnail service seems like something you don’t really need, especially in C#, where creating thumbnails is easy. But this service also offers smart cropping, which is nice because it recognizes the important parts of the image and creates a good thumbnail. If you turn it on, it detects faces in the picture and “focuses” on them. If it’s off, it just takes the part in the middle of the image.
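To illustrate what “the part in the middle” means, here is a sketch of a plain center crop: the largest rectangle with the thumbnail’s aspect ratio that fits in the image, centered in both directions. This is an assumption about the general idea, not the service’s documented algorithm, and `CenterCrop.Compute` is a hypothetical helper:

```csharp
using System;

public static class CenterCrop
{
    // Returns the centered crop rectangle (in source pixels) that has the same
    // aspect ratio as the requested thumbnail and is as large as possible.
    public static (int Left, int Top, int Width, int Height) Compute(
        int srcWidth, int srcHeight, int thumbWidth, int thumbHeight)
    {
        if (srcWidth <= 0 || srcHeight <= 0 || thumbWidth <= 0 || thumbHeight <= 0)
            throw new ArgumentException("All dimensions must be positive.");

        // Compare srcWidth/srcHeight with thumbWidth/thumbHeight using integers
        long lhs = (long)srcWidth * thumbHeight;
        long rhs = (long)thumbWidth * srcHeight;

        int cropWidth, cropHeight;
        if (lhs > rhs)
        {
            // Source is wider than the thumbnail: keep the full height, trim left and right
            cropHeight = srcHeight;
            cropWidth = (int)((long)srcHeight * thumbWidth / thumbHeight);
        }
        else
        {
            // Source is taller (or equal): keep the full width, trim top and bottom
            cropWidth = srcWidth;
            cropHeight = (int)((long)srcWidth * thumbHeight / thumbWidth);
        }

        return ((srcWidth - cropWidth) / 2, (srcHeight - cropHeight) / 2, cropWidth, cropHeight);
    }
}
```

For a 1200 x 675 image like the ones above and a 200 x 200 thumbnail, this keeps a 675 x 675 square starting at x = 262, which is then scaled down. Anything near the left or right edge is cut off, and that is the situation smart cropping is meant to handle.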

I took one image and widened it so that my face is on the left side. Then I created two thumbnails from it, one with and one without smart cropping. This is the result:

I also created a thumbnail from one of the images above. This is the result:

The code for creating the thumbnails is:

```csharp
// Uses the APIKEY field and the using directives of the first sample
public static async Task<byte[]> GetThumbnail(byte[] image, int width, int height, bool smartCropping)
{
    var client = new HttpClient();
    client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key", APIKEY);

    // e.g.: width=200&height=150&smartCropping=true
    // bool.ToString() yields "True", so lower-case it to match the form above
    string requestParameters = $"width={width}&height={height}&smartCropping={smartCropping.ToString().ToLowerInvariant()}";
    string uri = "https://westus.api.cognitive.microsoft.com/vision/v1.0/generateThumbnail?" + requestParameters;

    using (var content = new ByteArrayContent(image))
    {
        content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");
        var response = await client.PostAsync(uri, content);

        // On success, the response body is the thumbnail image itself
        return await response.Content.ReadAsByteArrayAsync();
    }
}
```
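The method above returns whatever body comes back. If the request fails (for example because of an invalid key), the body is an error message rather than image data, and writing it to a file produces a broken image. A minimal sketch of a guard, assuming failures are signaled by a non-success HTTP status code (`ThumbnailResponse.ReadImageAsync` is a hypothetical helper):

```csharp
using System.Net.Http;
using System.Threading.Tasks;

public static class ThumbnailResponse
{
    // Returns the image bytes on success; otherwise throws with the error text
    // so that the caller never saves an error message as an image file.
    public static async Task<byte[]> ReadImageAsync(HttpResponseMessage response)
    {
        if (!response.IsSuccessStatusCode)
        {
            string error = await response.Content.ReadAsStringAsync();
            throw new HttpRequestException(
                $"Thumbnail request failed ({(int)response.StatusCode}): {error}");
        }
        return await response.Content.ReadAsByteArrayAsync();
    }
}
```

In `GetThumbnail`, `return await ThumbnailResponse.ReadImageAsync(response);` would replace the last line inside the `using` block.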

NuGet Package

As mentioned at the beginning, Microsoft provides a NuGet package, Microsoft.ProjectOxford.Vision. It supports all of the operations described above and makes the results much easier to work with, because they come back as typed objects with properties instead of raw JSON. So, let’s see how the different services can be used with the NuGet package:

Analyze an image

In the following sample, I analyze the image, print the tags with a confidence higher than 90%, and print the gender and age of every recognized face. For the following image, it returns the tags person, outdoor, tree and man, and one face: Male, 37.

```csharp
using Microsoft.ProjectOxford.Vision;
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

namespace AzureCognitiveService.Vision
{
    class Program
    {
        static void Main(string[] args)
        {
            Console.WriteLine("Welcome to the Azure Cognitive Services - Computer Vision.");
            Console.WriteLine("Please enter the path of the image:");
            string path = Console.ReadLine();

            Task.Run(async () => await AnalyzeImage(path)).Wait();

            Console.WriteLine("Press key to exit!");
            Console.ReadKey();
        }

        public static async Task AnalyzeImage(string path)
        {
            // Pass the key and the endpoint of your own service
            var client = new VisionServiceClient("APIKEY", "https://westus.api.cognitive.microsoft.com/vision/v1.0");

            // Request all of these visual features in one call
            IEnumerable<VisualFeature> visualFeatures = new VisualFeature[]
            {
                VisualFeature.Adult,
                VisualFeature.Categories,
                VisualFeature.Color,
                VisualFeature.Description,
                VisualFeature.Faces,
                VisualFeature.ImageType,
                VisualFeature.Tags
            };

            using (System.IO.Stream stream = System.IO.File.OpenRead(path))
            {
                var analyzed = await client.AnalyzeImageAsync(stream, visualFeatures);

                // Only print tags with a confidence above 90%
                var tags = from tag in analyzed.Tags where tag.Confidence > 0.9 select tag.Name;
                Console.WriteLine(string.Join(", ", tags));

                var faces = from face in analyzed.Faces select face.Gender + " - " + face.Age.ToString();
                if (faces.Any())
                    Console.WriteLine(string.Join(", ", faces));
            }
        }
    }
}
```

OCR – optical character recognition

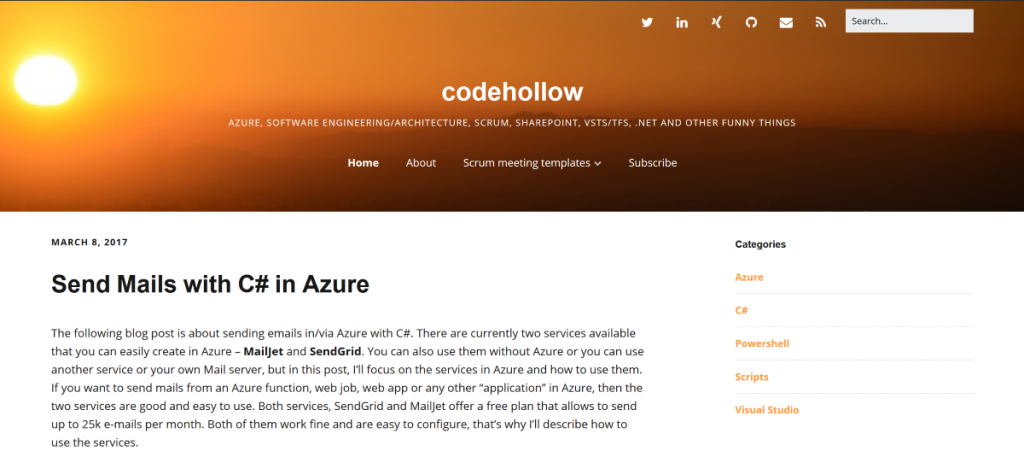

To test OCR, I took a screenshot of my blog and sent it to the OCR service. This is the output:

```text
o Search,_ codehollow AZURE, SOFTWARE ENGINEERING/ARCHITECTURE, SCRUM, SHAREPOINT, vsTS/TFS, .NET AND OTHER FUNNY THINGS Home About Scrum meeting templates v Subscribe MARCH 8, 2017 Send Mails with C# in Azure The following blog post is about sending emails in/via Azure with C#. There are currently two services available that you can easily create in Azure - MailJet and SendGrid. You can also use them without Azure or you can use another service or your own Mail server, but in this post, I'll focus on the services in Azure and how to use them. If you want to send mails from an Azure function, web job, web app or any other "application" in Azure, then the two services are good and easy to use. Both services, SendGrid and MailJet offer a free plan that allows to send up to 25k e-mails per month. Both of them work fine and are easy to configure, that's why I'll describe how to use the services. Categories Azure Powershell sc ripts Visual Studio
```

And here comes the code for OCR using the NuGet package:

```csharp
using Microsoft.ProjectOxford.Vision;
using System;
using System.Linq;
using System.Threading.Tasks;

namespace AzureCognitiveService.Vision
{
    class Program
    {
        static void Main(string[] args)
        {
            Console.WriteLine("Welcome to the Azure Cognitive Services - Computer Vision.");
            Console.WriteLine("Please enter the path of the image:");
            string path = Console.ReadLine();

            Task.Run(async () => await Ocr(path)).Wait();

            Console.WriteLine("Press key to exit!");
            Console.ReadKey();
        }

        public static async Task Ocr(string path)
        {
            // Pass the key and the endpoint of your own service
            var client = new VisionServiceClient("APIKEY", "https://westus.api.cognitive.microsoft.com/vision/v1.0");

            using (System.IO.Stream stream = System.IO.File.OpenRead(path))
            {
                var ocr = await client.RecognizeTextAsync(stream);

                // The result is grouped into regions, lines and words
                var texts = from region in ocr.Regions
                            from line in region.Lines
                            select line;

                // Print one recognized line per console line
                foreach (var line in texts)
                {
                    Console.WriteLine(string.Join(" ", line.Words.Select(w => w.Text)));
                }
            }
        }
    }
}
```

Get thumbnail

This is how to get a thumbnail from an image:

```csharp
using Microsoft.ProjectOxford.Vision;
using System;
using System.IO;
using System.Threading.Tasks;

namespace AzureCognitiveService.Vision
{
    class Program
    {
        static void Main(string[] args)
        {
            Console.WriteLine("Welcome to the Azure Cognitive Services - Computer Vision.");
            Console.WriteLine("Please enter the path of the image:");
            string path = Console.ReadLine();

            Task.Run(async () =>
            {
                var thumbnail = await GetThumbnail(path);

                // "photo.jpg" becomes "photo.thumb.jpg" (GetExtension already includes the dot)
                var newFileName = Path.ChangeExtension(path, "thumb" + Path.GetExtension(path));
                File.WriteAllBytes(newFileName, thumbnail);
            }).Wait();

            Console.WriteLine("Press key to exit!");
            Console.ReadKey();
        }

        public static async Task<byte[]> GetThumbnail(string path)
        {
            // Pass the key and the endpoint of your own service
            var client = new VisionServiceClient("APIKEY", "https://westus.api.cognitive.microsoft.com/vision/v1.0");

            using (Stream stream = File.OpenRead(path))
            {
                // 200 x 200 pixels with smart cropping enabled
                var thumbnail = await client.GetThumbnailAsync(stream, 200, 200, true);
                return thumbnail;
            }
        }
    }
}
```

Additional information

Computer Vision API: https://www.microsoft.com/cognitive-services/en-us/computer-vision-api

Computer Vision API Version 1.0: https://www.microsoft.com/cognitive-services/en-us/Computer-Vision-API/documentation
